Deploying This Blog Site Using Serverless Technologies on AWS

Tech: React - GraphQL - Terraform - AWS

Posted: 2022-02-15

Last Updated: 2022-02-15

Using GitHub Actions to continuously deploy a Serverless blog site

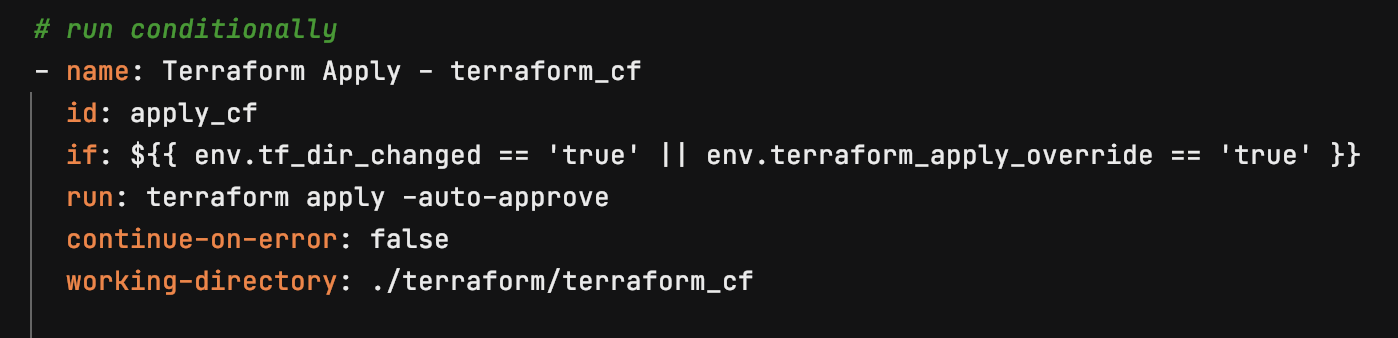

In this post I am going to cover how to use GitHub Actions to continuously build and deploy a serverless web application (this site) using Terraform, React, GraphQL, and several AWS services including Lambda, API Gateway, S3, SNS, DynamoDB, Route53, Certificate Manager and CloudFront.

Diagram of what I am implementing:

To set this up yourself you need an AWS account, a Terraform Cloud account, a custom domain, basic React skills, basic Terraform skills, a working knowledge of GitHub Actions and of course the requisite amount of OCD needed to figure out the stuff I almost certainly left out (I wouldn't want to deny you the sweet pain of experience).

I started with Create React App and built on it following How to set up a GitHub Pages Blog Site Using React and MDX. The approach for using S3 to serve the static site assets is essentially the same as using GitHub Pages, but once you start thinking about putting an API on the back end, I think it's "easier" to use AWS.

A little background reading from around the internet:

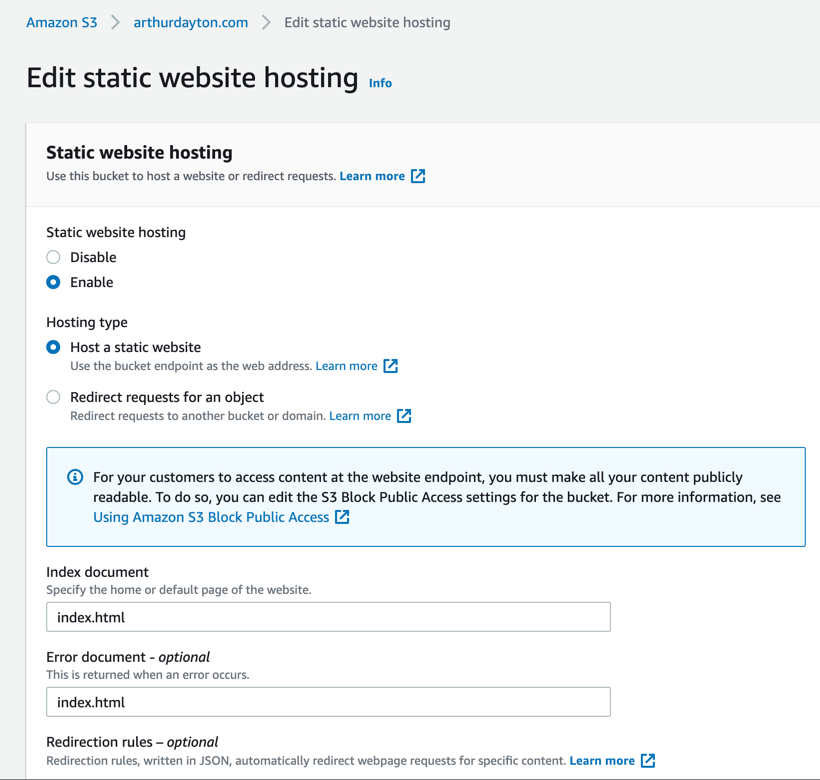

S3 Static Site Setup

S3 (Simple Storage Service) is definitely the OG of AWS. It's cheap (it currently costs me less than 5 cents a month), easy to use (too easy?), and integrates pretty seamlessly with lots of other AWS services, such as CloudFront.

CloudFront Setup

CloudFront is a CDN that caches your content at edge locations around the world. It integrates with S3 as an origin and with Route53 alias records, so we can easily map our domain to our content.

This post does a great job of explaining how to integrate S3 and CloudFront.
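How long CloudFront keeps a copy of a file depends on how the `min_ttl`, `default_ttl` and `max_ttl` settings on a cache behavior interact with the origin's `Cache-Control` header. This JavaScript sketch is a simplified model that assumes only `max-age` matters. It ignores `s-maxage`, `Expires` and `no-cache`/`no-store`. The 0 / 3600 / 86400 values are the ones this post's Terraform distribution uses.

```javascript
// Simplified model of how a CloudFront cache behavior picks a TTL.
// Assumption: only Cache-Control max-age is considered; s-maxage, Expires,
// no-cache/no-store and other directives are ignored here.
const siteBehavior = { minTtl: 0, defaultTtl: 3600, maxTtl: 86400 };

function effectiveTtl(cacheControl, { minTtl, defaultTtl, maxTtl }) {
  const match = /\bmax-age=(\d+)/i.exec(cacheControl || "");
  if (!match) {
    // Origin sent no max-age: fall back to default_ttl
    return defaultTtl;
  }
  const maxAge = Number(match[1]);
  // Origin max-age is clamped into the [min_ttl, max_ttl] range
  return Math.min(Math.max(maxAge, minTtl), maxTtl);
}

console.log(effectiveTtl(undefined, siteBehavior)); // 3600
console.log(effectiveTtl("public, max-age=60", siteBehavior)); // 60
console.log(effectiveTtl("max-age=31536000", siteBehavior)); // 86400
```

The upload step may not set `Cache-Control` metadata on the objects. In that case `default_ttl` (one hour) is what applies, so a fresh deploy can take up to an hour to show up at the edge unless the distribution is invalidated.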

Building out the components of the architecture diagram

To build out my architecture diagram above, I use Terraform to define my AWS resources, the AWS CLI to push my React code to my S3 bucket, and a GitHub Actions pipeline to deploy whenever I push changes to the main branch of my repository. My API is Apollo GraphQL (❤️🔥) for getting and submitting comments. It runs as a Lambda function (🤯) and uses DynamoDB as a data store. At this site's traffic it costs almost nothing to run, and it scales automatically with demand without any servers to manage.
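Running GraphQL on Lambda relies on API Gateway's `AWS_PROXY` integration, which is the type used in this post's API Gateway Terraform. API Gateway hands the whole HTTP request to the function as an event and expects a response object back. The sketch below is a hand-rolled Node.js illustration of that contract only. It is not Apollo's handler, which this project exports as `graphql.graphqlHandler`. The `resolvers` map and the naive query "parsing" are hypothetical stand-ins for a real schema.

```javascript
// Sketch of the API Gateway Lambda proxy (AWS_PROXY) contract.
// NOT Apollo's handler: the resolver map and the naive query "parsing"
// below are hypothetical stand-ins used only to show the event/response shape.
const resolvers = {
  comments: () => [{ id: "1", text: "Nice post!" }],
};

// The proxy integration expects statusCode, headers and a string body back
function respond(statusCode, payload) {
  return {
    statusCode,
    headers: { "Content-Type": "application/json" },
    body: JSON.stringify(payload),
  };
}

async function handler(event) {
  // API Gateway passes the HTTP body as a string (base64 if isBase64Encoded)
  const raw = event.isBase64Encoded
    ? Buffer.from(event.body || "", "base64").toString("utf8")
    : event.body || "{}";

  let request;
  try {
    request = JSON.parse(raw);
  } catch (err) {
    return respond(400, { errors: [{ message: "Request body must be JSON" }] });
  }

  // Toy execution: treat "{ comments }" as a lookup of a top-level field name
  const field = String(request.query || "").replace(/[{}\s]/g, "");
  const known = Object.prototype.hasOwnProperty.call(resolvers, field);
  if (!known) {
    return respond(400, { errors: [{ message: `Unknown field: ${field}` }] });
  }
  return respond(200, { data: { [field]: resolvers[field]() } });
}

handler({
  httpMethod: "POST",
  path: "/graphql",
  body: JSON.stringify({ query: "{ comments }" }),
}).then((res) => console.log(res.statusCode, res.body));
```

Apollo's Lambda integration does the real parsing, validation and execution. The shape that goes in and comes out of the function is the same, though, which is why one function can serve both the `/graphql` resource and the root method.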

I could easily have used AWS tools for everything, but I think there is good reason to stick with some cloud-agnostic tools. It becomes clearer every day that the enterprise cloud provider battle is going to play out like the enterprise database and programming language battles: everybody ends up with pretty much everything.

Code Here

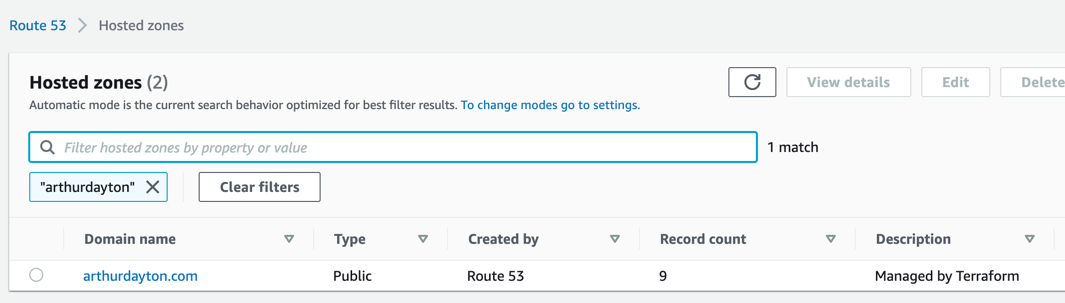

Hosted Zone

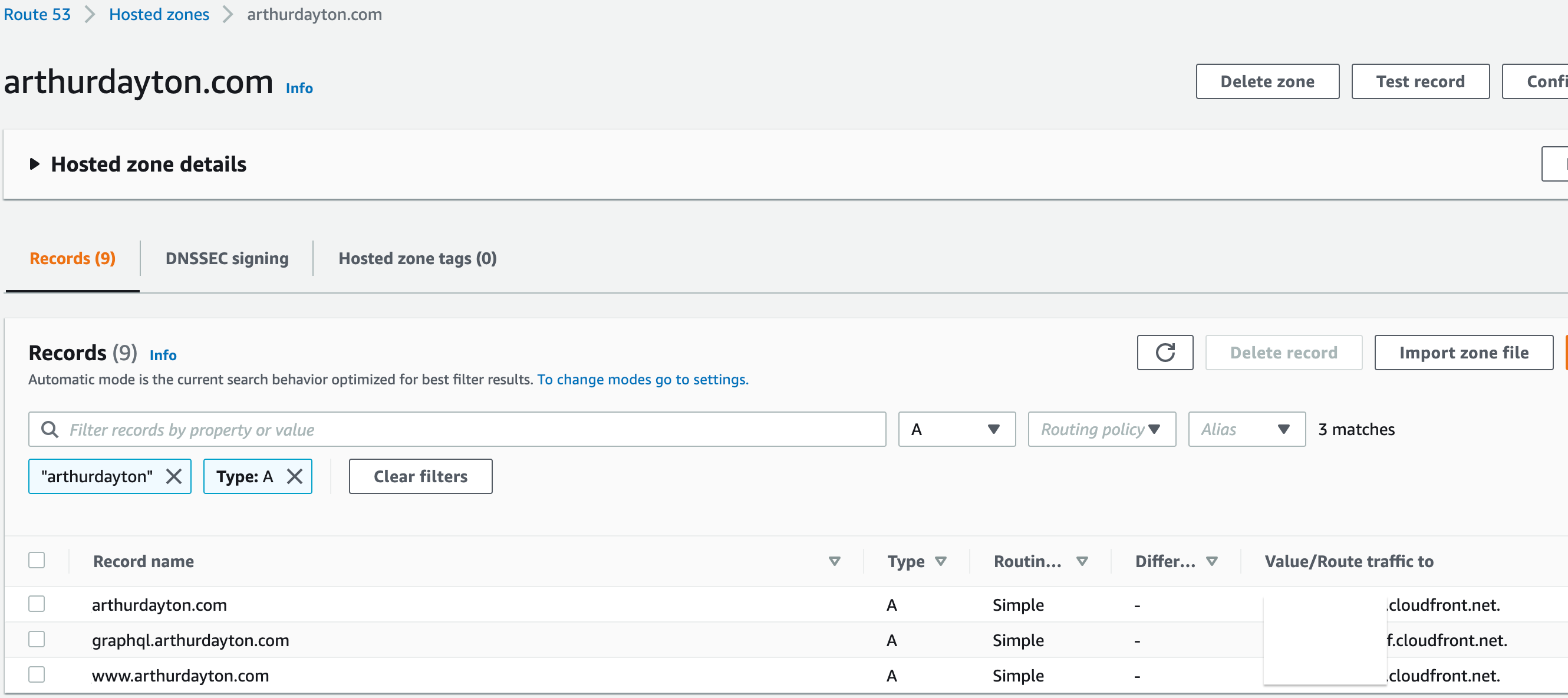

If I want to reach the world, I'm going to need a domain, and it will need SSL/TLS certificates. So I'm using Route53 to register and renew the domain, and ACM (AWS Certificate Manager) to issue and renew the certificates. My hosted zone in Route53 routes traffic to my CloudFront distributions for my React site and my GraphQL endpoint. I will need to create those CloudFront distributions, of course, which I show below. As I go along, I create Terraform files for all of the resources. That way I can easily create and destroy them on demand, and deploying my site stays automated and repeatable.

Basic Terraform code looks like this:

```hcl
// hosted zone
resource "aws_route53_zone" "main" {
  name = local.bucket_name
}

// gmail record
resource "aws_route53_record" "gmail_mx" {
  zone_id = aws_route53_zone.main.zone_id
  name    = local.bucket_name
  type    = "MX"
  records = [
    "1 ASPMX.L.GOOGLE.COM",
    "5 ALT1.ASPMX.L.GOOGLE.COM",
    "5 ALT2.ASPMX.L.GOOGLE.COM",
    "10 ALT3.ASPMX.L.GOOGLE.COM",
    "10 ALT4.ASPMX.L.GOOGLE.COM",
  ]
  ttl = "300"
}
```

The most important thing to remember, especially if you are switching what your domain points at, is that when you are sure it's not DNS, it's probably DNS.
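The MX values in the Gmail record use the `<priority> <mail server>` format. Sending servers try the lowest number first and fall back to higher numbers only when those hosts can't be reached, treating servers with equal priority as alternatives (RFC 5321). As a quick illustration, this small JavaScript sketch parses the same values and groups them in the order a sender would try them. It only models the rule; the deployment doesn't need it.

```javascript
// Sketch: how senders interpret the MX values from the Terraform record.
// Each value is "<priority> <mail server>"; lower numbers are tried first,
// and servers sharing a priority are alternatives at the same level.
const mxValues = [
  "1 ASPMX.L.GOOGLE.COM",
  "5 ALT1.ASPMX.L.GOOGLE.COM",
  "5 ALT2.ASPMX.L.GOOGLE.COM",
  "10 ALT3.ASPMX.L.GOOGLE.COM",
  "10 ALT4.ASPMX.L.GOOGLE.COM",
];

function parseMx(values) {
  return values.map((value) => {
    const [priority, exchange] = value.trim().split(/\s+/);
    return { priority: Number(priority), exchange };
  });
}

// Returns [priority, [servers...]] pairs in the order a sender would try them
function deliveryOrder(values) {
  const groups = new Map();
  const sorted = parseMx(values).sort((a, b) => a.priority - b.priority);
  for (const { priority, exchange } of sorted) {
    if (!groups.has(priority)) groups.set(priority, []);
    groups.get(priority).push(exchange);
  }
  return [...groups.entries()];
}

console.log(deliveryOrder(mxValues));
```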

This shows my hosted zone in the AWS Console:

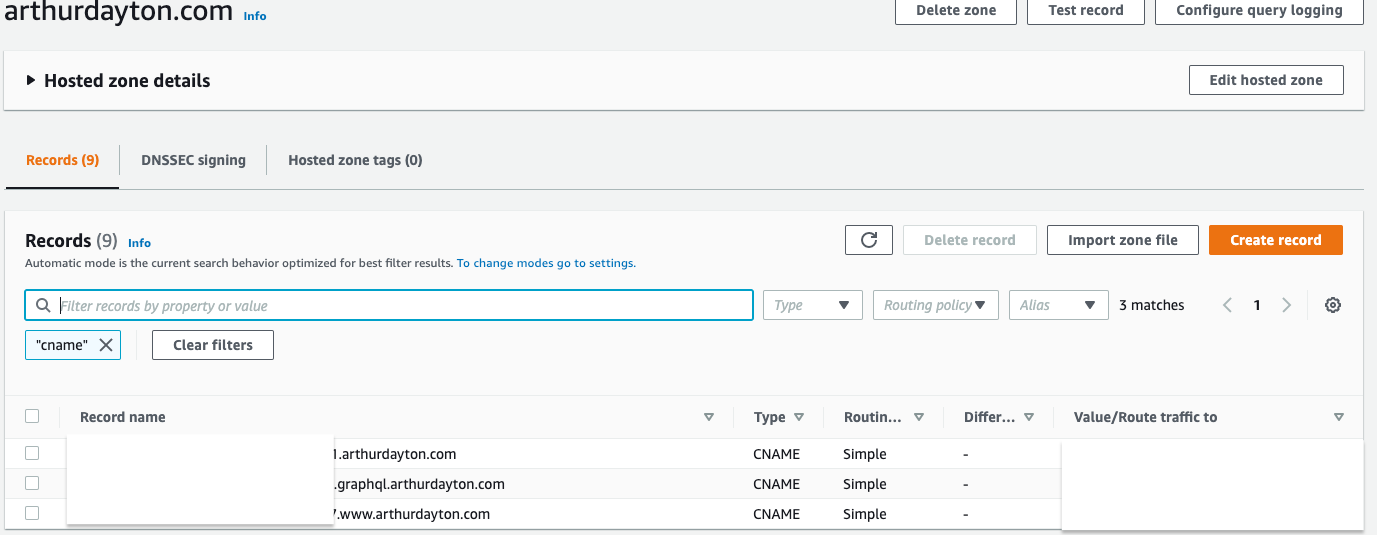

These are the records pointing to my CloudFront distributions:

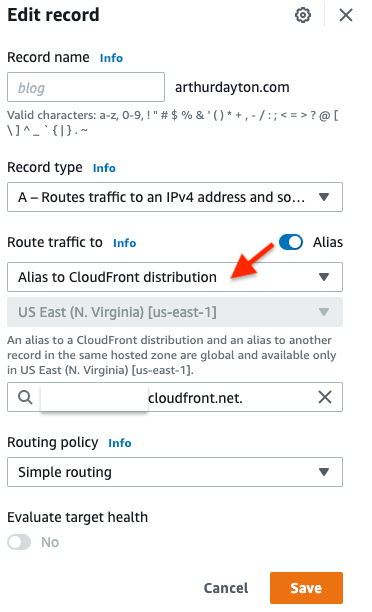

This shows the record detail and how to point to a CloudFront distribution (I use Terraform to create these when I deploy to CloudFront below):

Certificate

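Using ACM, you can request a certificate for your domain and have it validated automatically with DNS. ACM provides a CNAME record for each domain name on the certificate. Once those records exist in your hosted zone, ACM can confirm that you control the domain, issue the certificate and keep renewing it. One gotcha: certificates used by CloudFront, including the edge-optimized custom domain API Gateway creates for GraphQL, must be requested in the us-east-1 region.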

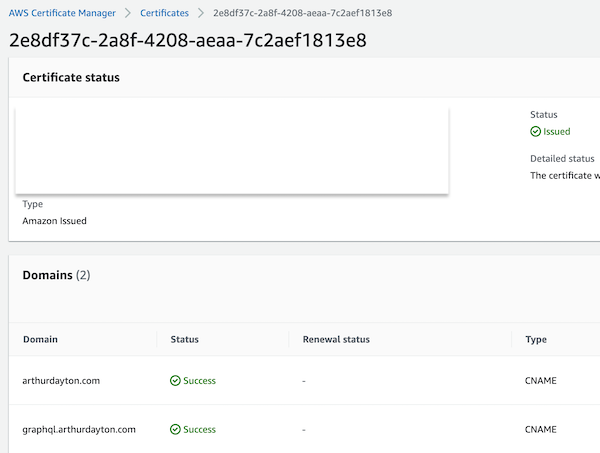
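The `for_each` in the certificate Terraform code in this section can be hard to read at first. Here is the same transformation in JavaScript. Each entry of `domain_validation_options` (one per name on the certificate) becomes a record definition keyed by domain name. Those records are what the validation resource depends on. The sample option values and the zone ID are made-up placeholders, not real ACM output.

```javascript
// Sketch of the Terraform for_each over domain_validation_options,
// rewritten in JavaScript. Sample values and the zone ID are placeholders.
const domainValidationOptions = [
  {
    domain_name: "example.com",
    resource_record_name: "_a1.example.com.",
    resource_record_type: "CNAME",
    resource_record_value: "_b2.acm-validations.aws.",
  },
  {
    domain_name: "www.example.com",
    resource_record_name: "_c3.www.example.com.",
    resource_record_type: "CNAME",
    resource_record_value: "_d4.acm-validations.aws.",
  },
];

// Mirrors: { for dvo in ... : dvo.domain_name => { name, record, type, zone_id } }
function validationRecords(options, zoneId) {
  const result = {};
  for (const dvo of options) {
    result[dvo.domain_name] = {
      name: dvo.resource_record_name,
      record: dvo.resource_record_value,
      type: dvo.resource_record_type,
      zone_id: zoneId,
    };
  }
  return result;
}

// Roughly the list handed to validation_record_fqdns
// (Terraform actually uses each created record's fqdn attribute)
function validationNames(recordMap) {
  return Object.values(recordMap).map((r) => r.name);
}

const records = validationRecords(domainValidationOptions, "Z_PLACEHOLDER");
console.log(Object.keys(records));
console.log(validationNames(records));
```

Keying by domain name means adding a subject alternative name to a certificate adds exactly one more validation record. `allow_overwrite = true` lets Terraform take over a record that already exists with the same name.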

This shows the certificate status and domain validation status in the ACM panel:

This shows the records created by the validation process (we can use Terraform for this as well):

Terraform code:

```hcl
// domain certificate
resource "aws_acm_certificate" "a" {
  domain_name               = local.bucket_name
  subject_alternative_names = ["www.${local.bucket_name}"]
  validation_method         = "DNS"
}

// validate that we have control over domain so our certificate is trusted
resource "aws_acm_certificate_validation" "example" {
  certificate_arn = aws_acm_certificate.a.arn
  // loop route records which are the domain name and subject_alternative_names from aws_acm_certificate resource
  validation_record_fqdns = [for record in aws_route53_record.example : record.fqdn]
}

resource "aws_route53_record" "example" {
  for_each = {
    for dvo in aws_acm_certificate.a.domain_validation_options : dvo.domain_name => {
      name    = dvo.resource_record_name
      record  = dvo.resource_record_value
      type    = dvo.resource_record_type
      zone_id = aws_route53_zone.main.zone_id
    }
  }

  allow_overwrite = true
  name            = each.value.name
  records         = [each.value.record]
  ttl             = 60
  type            = each.value.type
  zone_id         = each.value.zone_id
}

// graphql certificate
resource "aws_acm_certificate" "graphql" {
  domain_name               = local.bucket_name
  subject_alternative_names = ["graphql.${local.bucket_name}"]
  validation_method         = "DNS"
}

resource "aws_acm_certificate_validation" "graphql" {
  certificate_arn         = aws_acm_certificate.graphql.arn
  validation_record_fqdns = [for record in aws_route53_record.graphql : record.fqdn]
}

resource "aws_route53_record" "graphql" {
  for_each = {
    for dvo in aws_acm_certificate.graphql.domain_validation_options : dvo.domain_name => {
      name    = dvo.resource_record_name
      record  = dvo.resource_record_value
      type    = dvo.resource_record_type
      zone_id = aws_route53_zone.main.zone_id
    }
  }

  allow_overwrite = true
  name            = each.value.name
  records         = [each.value.record]
  ttl             = 60
  type            = each.value.type
  zone_id         = each.value.zone_id
}
```

CloudFront

After creating a CloudFront distribution with my S3 bucket as its origin, I can point the A (alias) records in Route53 at the distribution, as I showed in the Hosted Zone section above. As with everything else, we can automate and manage these with Terraform.

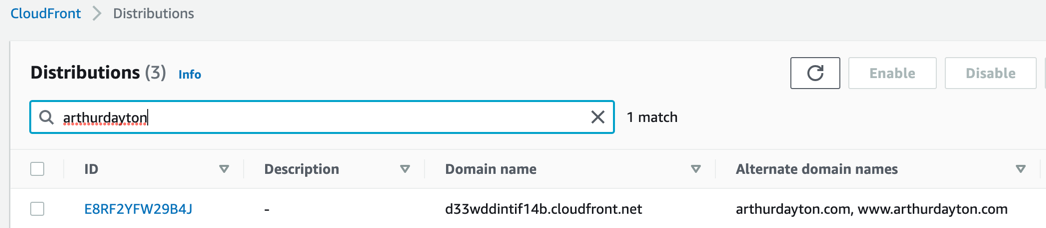

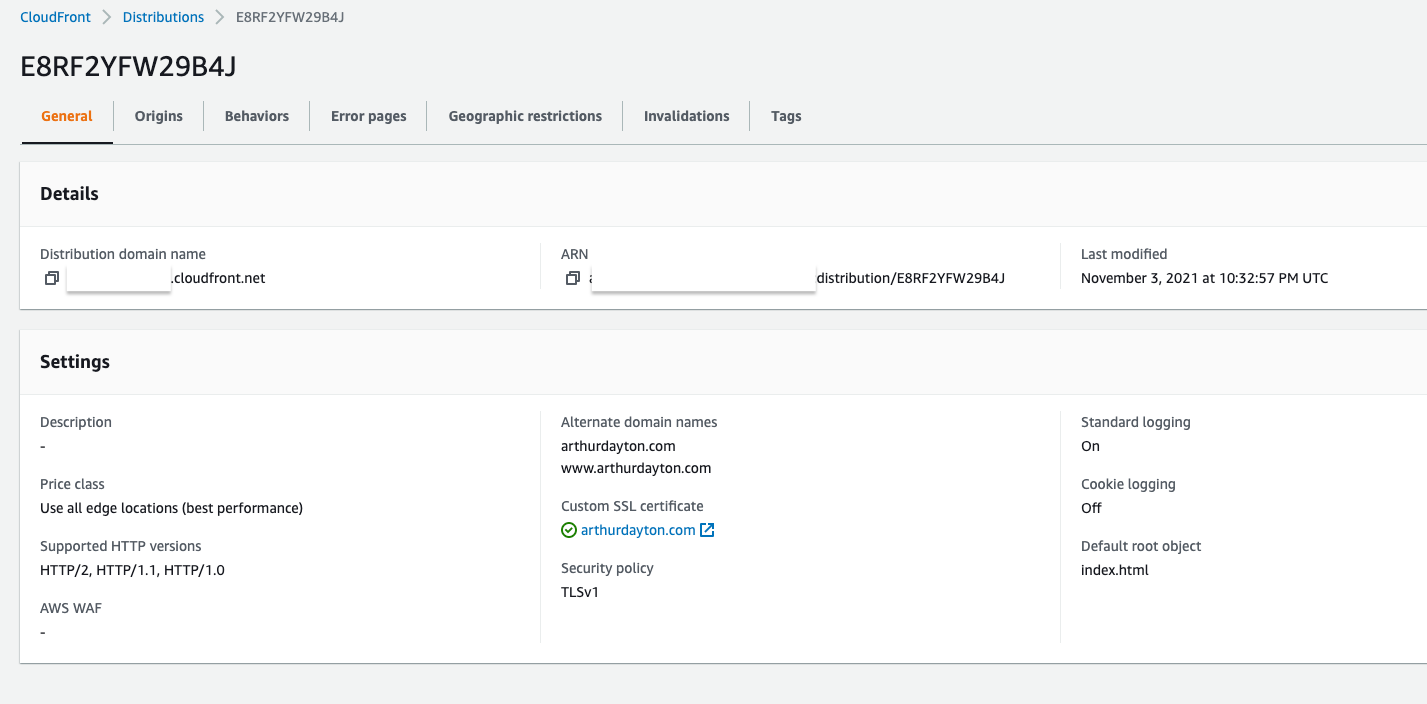

This shows my distribution for site assets:

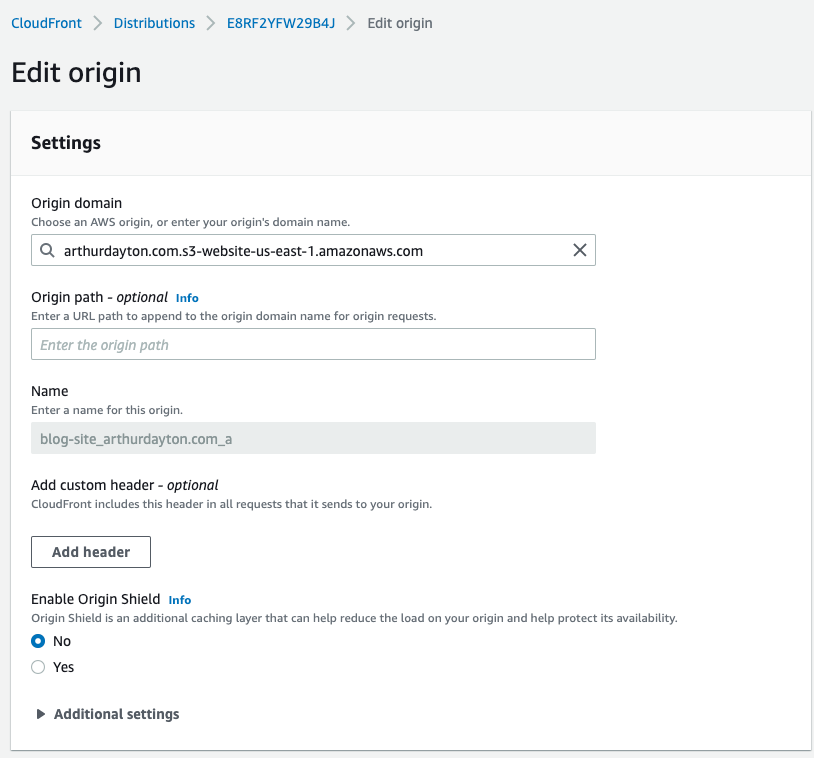

This shows the configured S3 bucket origin where my React build artifacts are pushed from the GitHub Actions pipeline that runs when I commit to my repository:

Terraform code:

```hcl
locals {
  s3_origin_id = "${local.resource_prefix}_${local.bucket_name}_a"
}

resource "aws_cloudfront_distribution" "s3_distribution_a" {
  origin {
    domain_name = local.s3_web_endpoint
    origin_id   = local.s3_origin_id

    // https://github.com/hashicorp/terraform-provider-aws/issues/7847
    custom_origin_config {
      http_port              = "80"
      https_port             = "443"
      origin_protocol_policy = "http-only"
      origin_ssl_protocols   = ["TLSv1", "TLSv1.1", "TLSv1.2"]
    }
  }

  enabled         = true
  is_ipv6_enabled = true
  // comment = ""
  default_root_object = "index.html"

  logging_config {
    include_cookies = false
    bucket          = local.log_bucket_name
    prefix          = local.resource_prefix
  }

  aliases = [local.bucket_name, local.alt_name]

  default_cache_behavior {
    allowed_methods  = ["DELETE", "GET", "HEAD", "OPTIONS", "PATCH", "POST", "PUT"]
    cached_methods   = ["GET", "HEAD"]
    target_origin_id = local.s3_origin_id

    forwarded_values {
      query_string = false

      cookies {
        forward = "none"
      }
    }

    viewer_protocol_policy = "https-only"
    min_ttl                = 0
    default_ttl            = 3600
    max_ttl                = 86400
  }

  price_class = "PriceClass_All"

  restrictions {
    geo_restriction {
      restriction_type = "none"
    }
  }

  tags = merge(
    local.base_tags,
    {
      Name = "${local.resource_prefix}-${local.bucket_name}"
    },
  )

  viewer_certificate {
    acm_certificate_arn = local.cert_arn
    ssl_support_method  = "sni-only"
  }
}

// Create subdomain A record pointing to Cloudfront distribution
resource "aws_route53_record" "www" {
  zone_id = local.zone_id
  name    = "www.${local.bucket_name}"
  type    = "A"

  alias {
    name                   = aws_cloudfront_distribution.s3_distribution_a.domain_name
    zone_id                = aws_cloudfront_distribution.s3_distribution_a.hosted_zone_id
    evaluate_target_health = false
  }
}

// Create domain A record pointing to Cloudfront distribution
resource "aws_route53_record" "a" {
  zone_id = local.zone_id
  name    = local.bucket_name
  type    = "A"

  alias {
    name                   = aws_cloudfront_distribution.s3_distribution_a.domain_name
    zone_id                = aws_cloudfront_distribution.s3_distribution_a.hosted_zone_id
    evaluate_target_health = false
  }
}
```

S3 Buckets

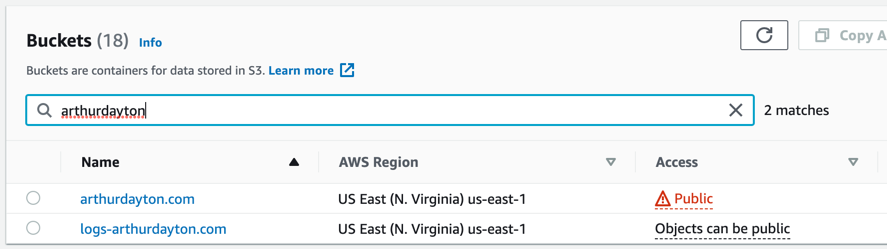

For this site I'm using two S3 buckets: one for the site assets, which serves as the origin for the CloudFront distribution, and one for logs and Athena query results.
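The site assets bucket's website configuration sets both `index_document` and `error_document` to `index.html` because of client-side routing. A URL like `/posts/some-post` has no matching object in the bucket, so S3 answers with the error document, which is the React app itself, and React renders the right page. This is a simplified JavaScript model of that lookup. The object keys are hypothetical, and redirects and routing rules are ignored. The missing-key status is modelled as 403 rather than 404 because the bucket policy grants `s3:GetObject` but not `s3:ListBucket`.

```javascript
// Simplified model of S3 static website hosting with
// index_document = error_document = "index.html".
// Assumptions: object keys are hypothetical; redirects and routing rules are ignored.
const website = { indexDocument: "index.html", errorDocument: "index.html" };
const siteKeys = new Set(["index.html", "favicon.ico", "static/js/main.js"]);

function resolveWebsiteRequest(path, keys, config) {
  let key = decodeURIComponent(path.split("?")[0]).replace(/^\/+/, "");
  // Requests for the root or a "folder" get the index document appended
  if (key === "" || key.endsWith("/")) key += config.indexDocument;
  if (keys.has(key)) return { status: 200, key };
  // Missing key: S3 serves the error document with an error status.
  // 403 (not 404) because the bucket policy grants s3:GetObject but not s3:ListBucket.
  return { status: 403, key: config.errorDocument };
}

console.log(resolveWebsiteRequest("/", siteKeys, website));
console.log(resolveWebsiteRequest("/static/js/main.js", siteKeys, website));
console.log(resolveWebsiteRequest("/posts/some-post", siteKeys, website));
```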

This shows the buckets:

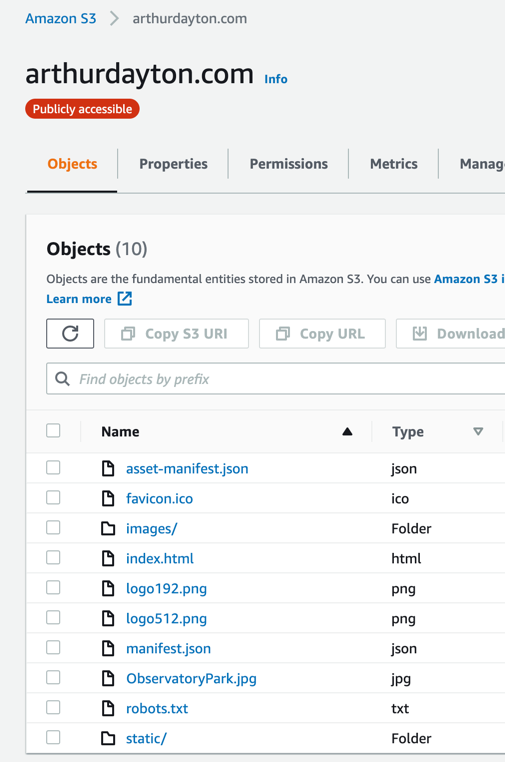

This shows the site assets from React build:

This shows relevant properties for site assets bucket:

Terraform code:

```hcl
// root domain bucket and policy
// Clean up bucket for destroy
// aws s3 rm s3://bucketname --recursive
resource "aws_s3_bucket" "a" {
  bucket = local.bucket_name

  // For a React site the index needs to be error document as well and React needs to handle errors
  website {
    index_document = "index.html"
    error_document = "index.html"
  }

  logging {
    target_bucket = aws_s3_bucket.log.id
    target_prefix = "s3-log/"
  }

  force_destroy = true

  tags = merge(
    local.base_tags,
    {
      Name      = "${local.resource_prefix}-${local.bucket_name}"
      directory = basename(path.cwd)
    },
  )
}

resource "aws_s3_bucket" "log" {
  bucket        = "logs-${local.bucket_name}"
  force_destroy = true

  grant {
    type        = "Group"
    permissions = ["READ_ACP", "WRITE"]
    uri         = "http://acs.amazonaws.com/groups/s3/LogDelivery"
  }

  //CloudFront
  // Permissions required to configure standard logging and to access your log files
  // https://docs.aws.amazon.com/AmazonCloudFront/latest/DeveloperGuide/AccessLogs.html
  grant {
    id          = "c4c1ede66af53448b93c283ce9448c4ba468c9432aa01d700d3878632f77d2d0"
    permissions = ["FULL_CONTROL", ]
    type        = "CanonicalUser"
  }

  grant {
    id          = data.aws_canonical_user_id.current_user.id
    type        = "CanonicalUser"
    permissions = ["FULL_CONTROL"]
  }

  tags = merge(
    local.base_tags,
    {
      Name = "${local.resource_prefix}-logs-${local.bucket_name}"
    },
  )
}

resource "aws_s3_bucket_object" "log_query_folder" {
  bucket = aws_s3_bucket.log.id
  acl    = "private"
  key    = "queryResults/"
  source = "/dev/null"
}

// This ensures that bucket content can be read publicly
// I like setting it up this way so it's easier to test changes
// Check here for different setups - https://aws.amazon.com/premiumsupport/knowledge-center/cloudfront-serve-static-website/
// Policy limits to specific bucket
resource "aws_s3_bucket_policy" "a" {
  bucket = aws_s3_bucket.a.id
  policy = jsonencode({
    Version = "2012-10-17"
    Id      = "${local.resource_prefix}-${local.bucket_name}-policy"
    Statement = [
      {
        Sid       = "PublicRead"
        Effect    = "Allow"
        Principal = "*"
        Action = [
          "s3:GetObject",
          "s3:GetObjectVersion"
        ]
        Resource = [
          aws_s3_bucket.a.arn,
          "${aws_s3_bucket.a.arn}/*",
        ]
      },
    ]
  })
}
```

GitHub Actions

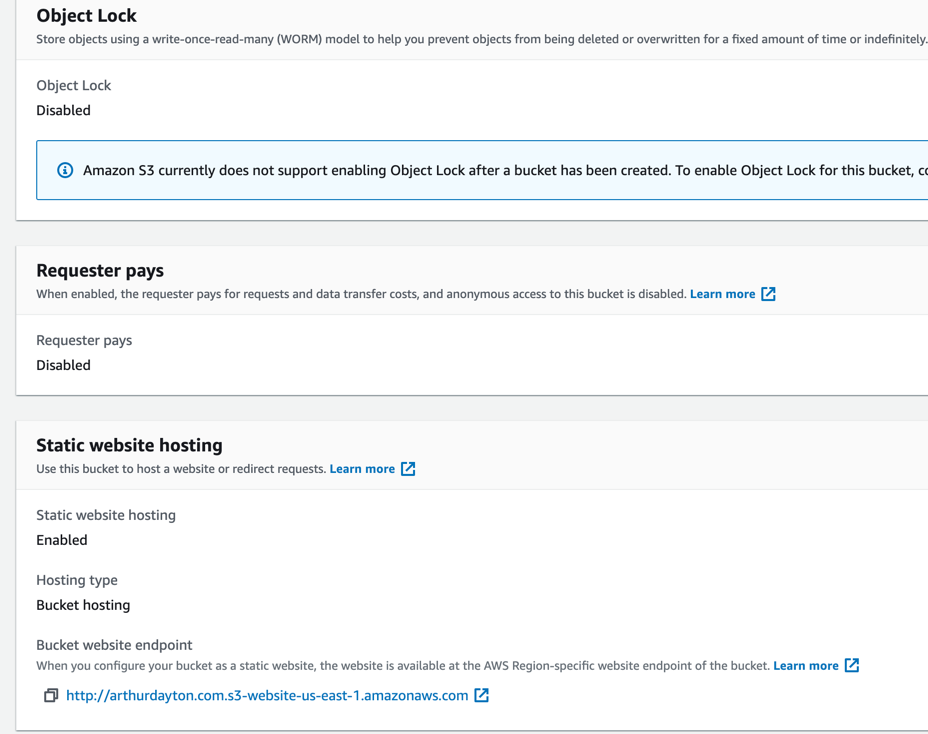

For continuous integration I am using GitHub Actions. Right now I deploy straight from the main branch instead of using a PR flow, because it's just me and local development plus GitHub Actions covers what I need. In any context larger than myself, I would set up a separate dev domain, either within a VPC or locked down by IP address. GitHub Actions is pretty awesome, and it's been exciting to watch it mature into an enterprise-ready tool. Care and feeding of build infrastructure is painful, and having this capability delivered right to my GitHub repository is awesome! If you've used Azure DevOps (lately), it will look and feel very familiar.

Environment

The first thing I needed to do was set up an environment in GitHub Actions where I can store secrets:

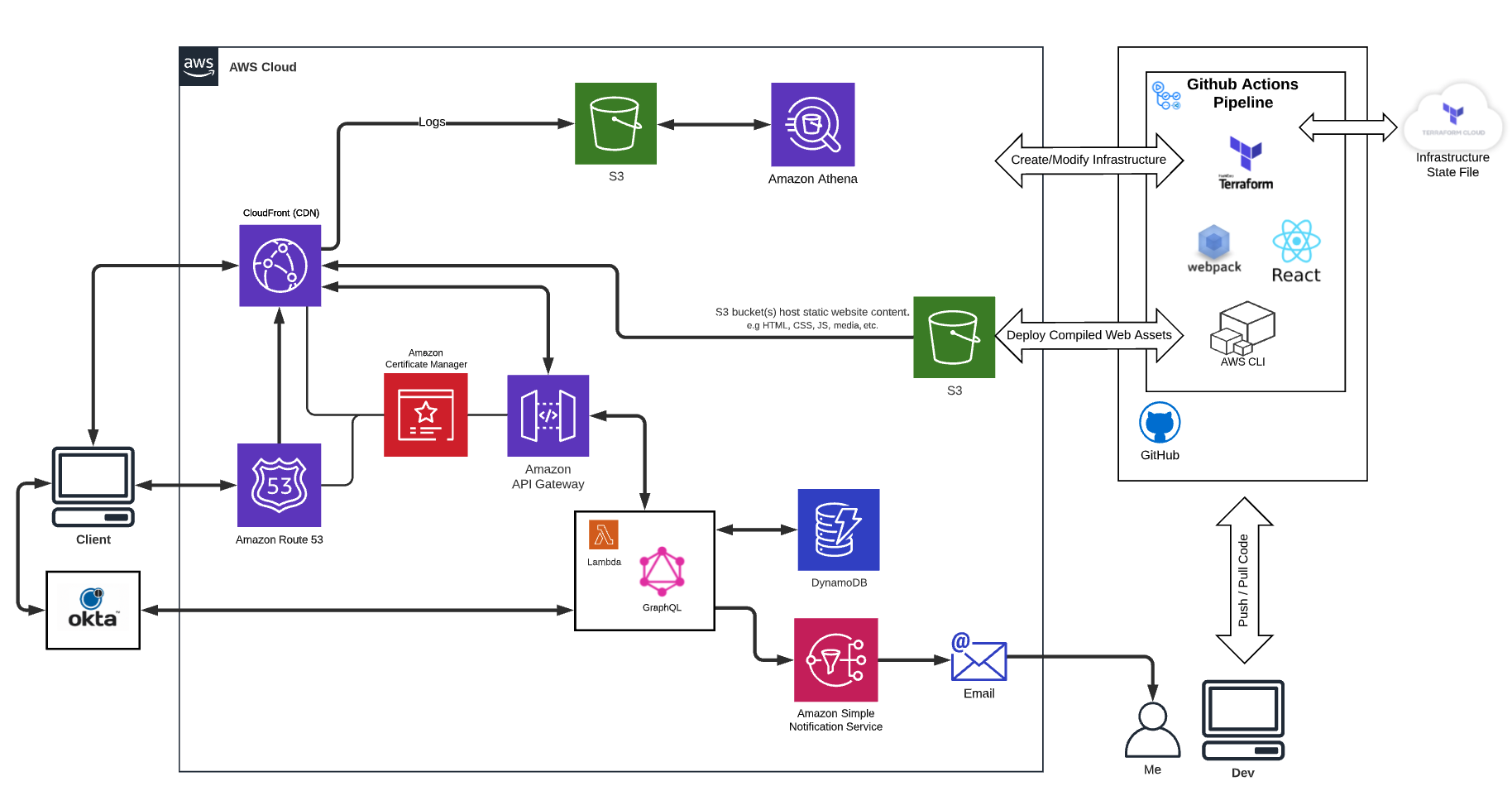

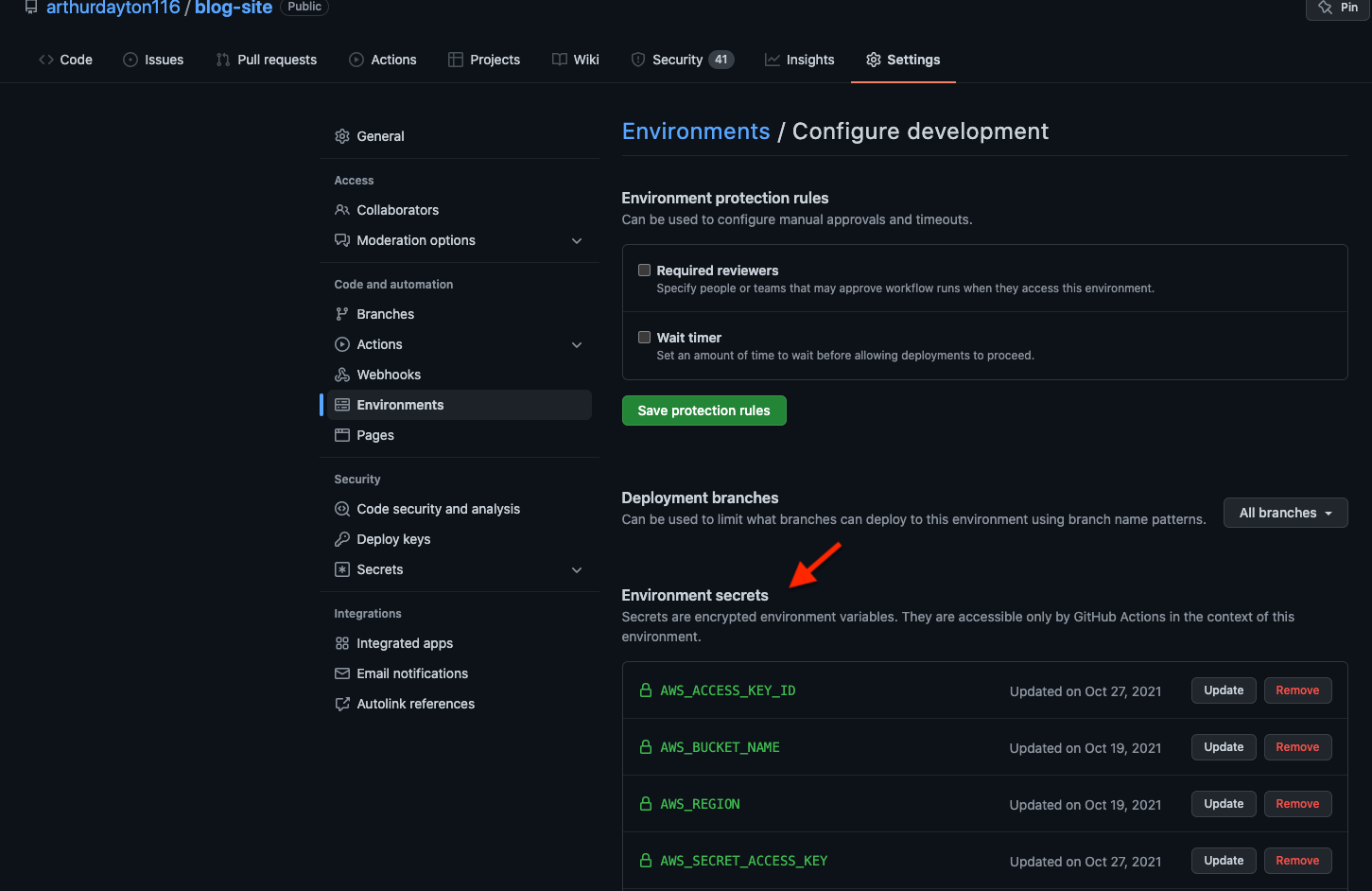

Actions Workflow

Then I can set up the YAML file that defines my workflow, which runs whenever I commit code. GitHub looks for workflow files in the .github/workflows directory of the repository.

I reference my environment in the job, and then I can pull its secrets into my build steps securely:

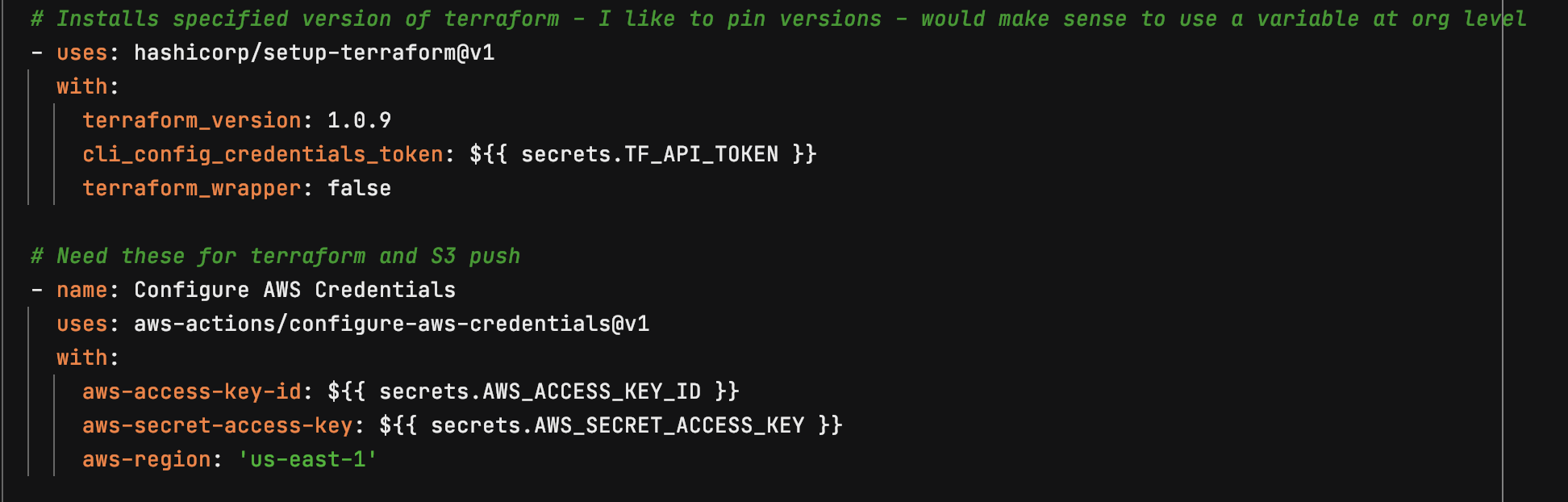

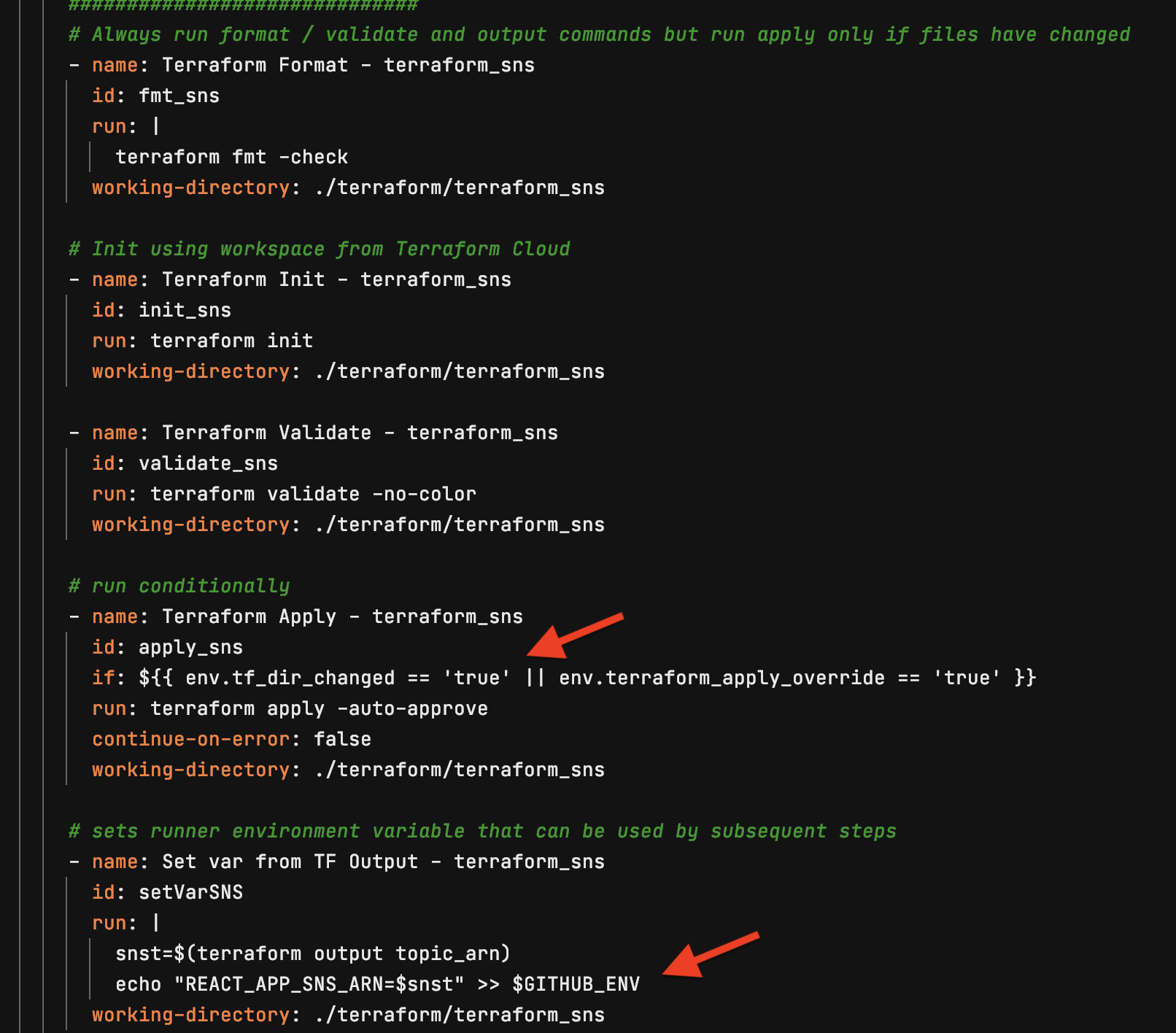

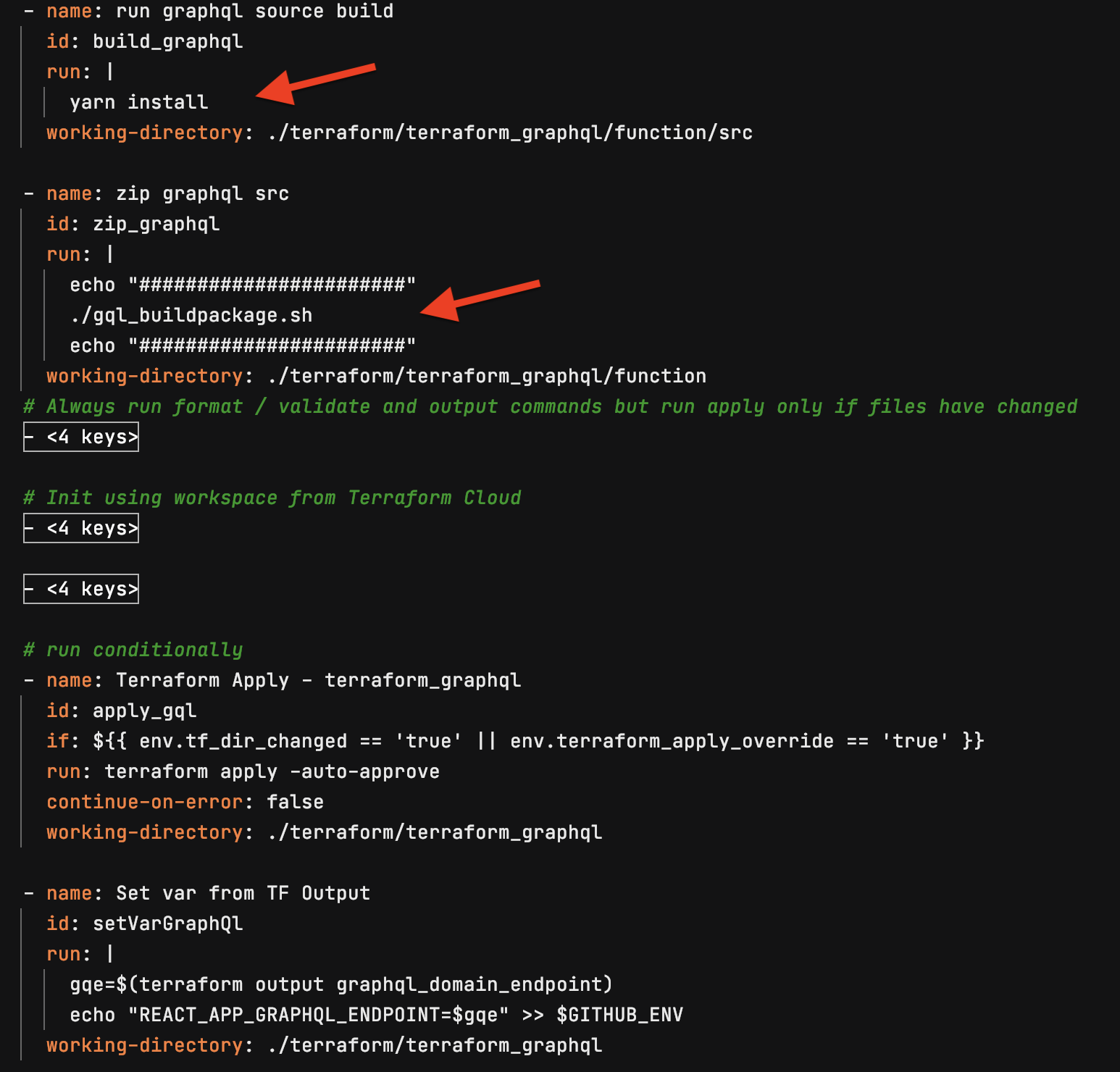
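One common way to hand environment secrets to Terraform is through environment variables. Terraform reads an input variable named `okta_client_id` from `TF_VAR_okta_client_id`, for example. The text doesn't spell out how this workflow maps its secrets, so treat that mapping as an assumption. The sketch below is a hypothetical fail-fast check you could run before the Terraform step. The variable names mirror the Okta variables used in this post's Lambda Terraform.

```javascript
// Hypothetical pre-flight check for a CI step that runs terraform apply.
// Terraform reads input variables from environment variables named TF_VAR_<name>.
// The names mirror the Okta variables used in the Lambda Terraform;
// how the real workflow maps its secrets is an assumption.
const requiredTfVars = [
  "okta_client_id",
  "okta_client_secret",
  "okta_domain",
  "okta_issuer_suffix",
  "okta_audience",
];

// Returns the names of required variables that are missing or empty
function missingTfVars(env, names = requiredTfVars) {
  return names.filter((name) => !env[`TF_VAR_${name}`]);
}

// In CI you would pass process.env and fail the job if anything is missing;
// only variable names are printed, never secret values.
const sampleEnv = { TF_VAR_okta_client_id: "abc123", TF_VAR_okta_domain: "dev-000000.okta.com" };
console.log(missingTfVars(sampleEnv));
```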

Now I can create all the steps needed to build this application from top to bottom:

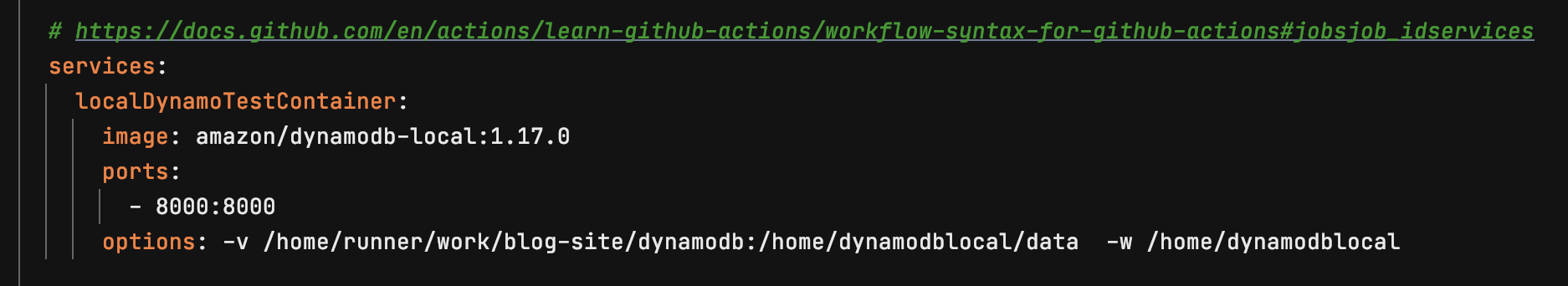

Set up a DynamoDB container for automated tests

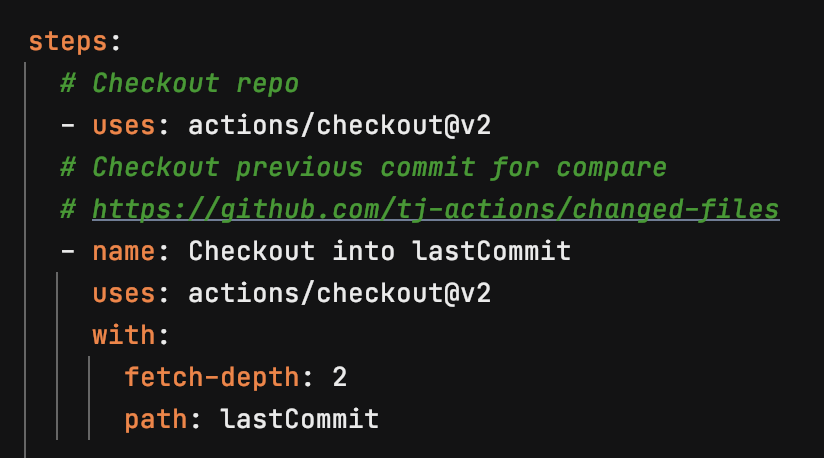
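For the tests to hit the DynamoDB container instead of the real table, the GraphQL service has to pick its endpoint from the environment. This is a hypothetical helper showing one way to do that, using the variables the compose file later in this post sets (`CYPRESS_GRAPHQL`, `DYNAMO_HOST`, `JWT_OVERRIDE`) and the `ddb_region` variable from the Lambda Terraform. The helper names and the fallback region are assumptions, not the project's code. The returned object has the `region`/`endpoint` shape that AWS SDK DynamoDB client constructors accept.

```javascript
// Hypothetical helper showing how the GraphQL service could pick its DynamoDB endpoint.
// CYPRESS_GRAPHQL, DYNAMO_HOST and JWT_OVERRIDE come from the compose file;
// ddb_region comes from the Lambda environment. The fallback region is an assumption.
const DYNAMODB_LOCAL_PORT = 8000; // port exposed by amazon/dynamodb-local in the compose file

function dynamoClientConfig(env) {
  const region = env.ddb_region || "us-east-1";
  if (env.CYPRESS_GRAPHQL === "true" && env.DYNAMO_HOST) {
    // Local/test run: reach the dynamodb-local container by its compose service name
    return { region, endpoint: `http://${env.DYNAMO_HOST}:${DYNAMODB_LOCAL_PORT}` };
  }
  // In Lambda, leave endpoint unset so the SDK uses the regional endpoint
  return { region };
}

// Authentication is skipped only when JWT_OVERRIDE is exactly "true"
function authRequired(env) {
  return env.JWT_OVERRIDE !== "true";
}

const composeEnv = { CYPRESS_GRAPHQL: "true", DYNAMO_HOST: "dynamodb", JWT_OVERRIDE: "true" };
console.log(dynamoClientConfig(composeEnv), authRequired(composeEnv));
```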

Check out the previous commit so we can see which files have changed and only run certain steps
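The "only run certain steps" idea boils down to mapping the output of `git diff --name-only` between the previous commit and `HEAD` onto pipeline steps. Here is a small JavaScript sketch of that decision. The `ui/` and `terraform/terraform_graphql/` prefixes come from the repository layout visible in the compose file. The step names and the broader `terraform/` rule are hypothetical.

```javascript
// Sketch of deciding which pipeline steps to run from
// `git diff --name-only <previous-commit> HEAD` output.
// ui/ and terraform/terraform_graphql/ come from the repo layout in the compose file;
// the step names and the broader terraform/ rule are hypothetical.
const stepRules = [
  { step: "deploy-graphql", prefixes: ["terraform/terraform_graphql/"] },
  { step: "terraform-apply", prefixes: ["terraform/"] },
  { step: "build-ui", prefixes: ["ui/"] },
];

function stepsToRun(diffOutput, rules = stepRules) {
  const files = diffOutput
    .split("\n")
    .map((line) => line.trim())
    .filter(Boolean);
  return rules
    .filter(({ prefixes }) => files.some((file) => prefixes.some((p) => file.startsWith(p))))
    .map(({ step }) => step);
}

console.log(stepsToRun("ui/src/App.js\nREADME.md\n"));
console.log(stepsToRun("terraform/terraform_graphql/function/src/index.js\n"));
```

`actions/checkout` fetches only the latest commit by default (`fetch-depth: 1`). The checkout needs a deeper history, for example `fetch-depth: 2`, before `git diff` can see the previous commit.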

Set up Terraform

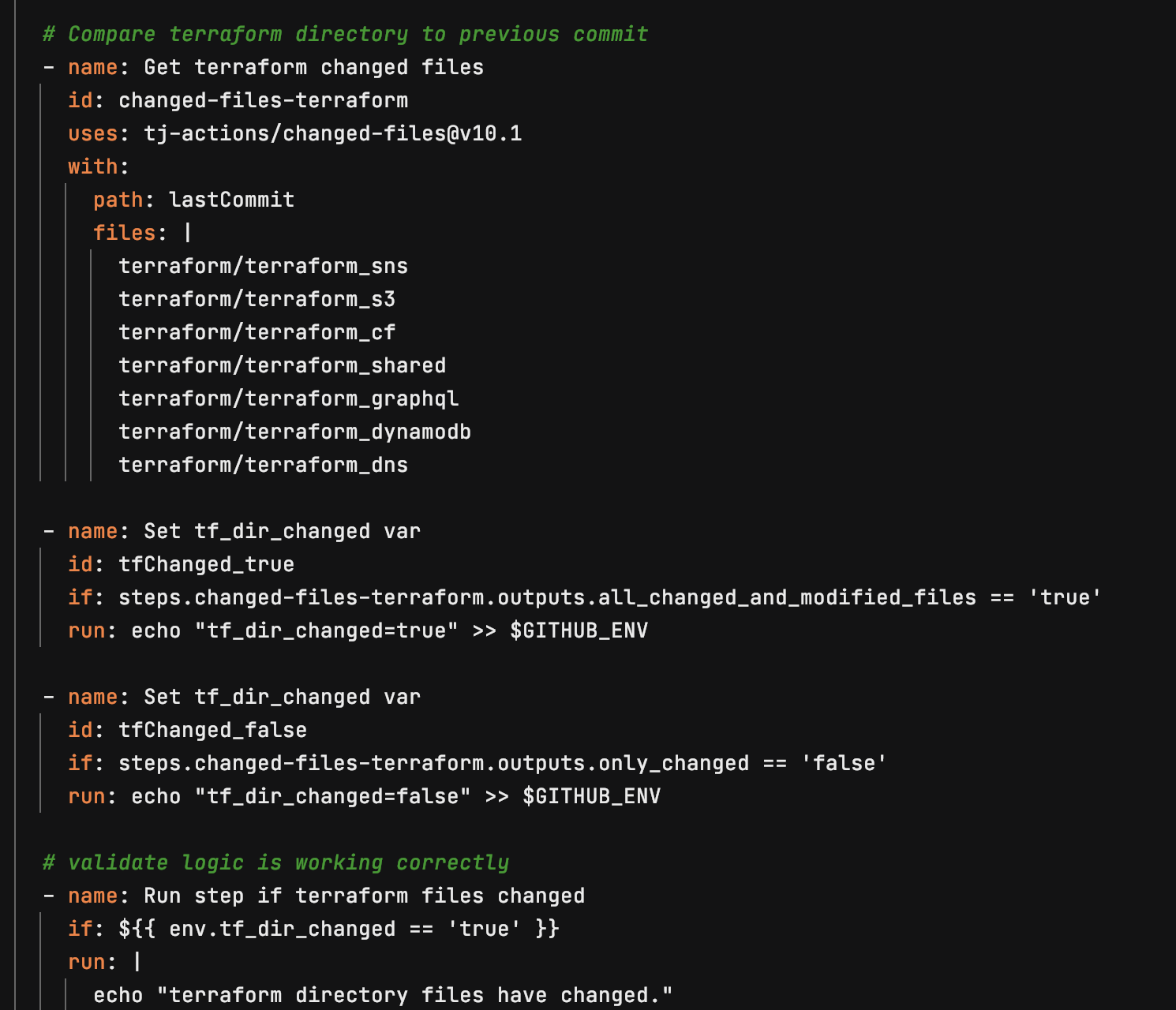

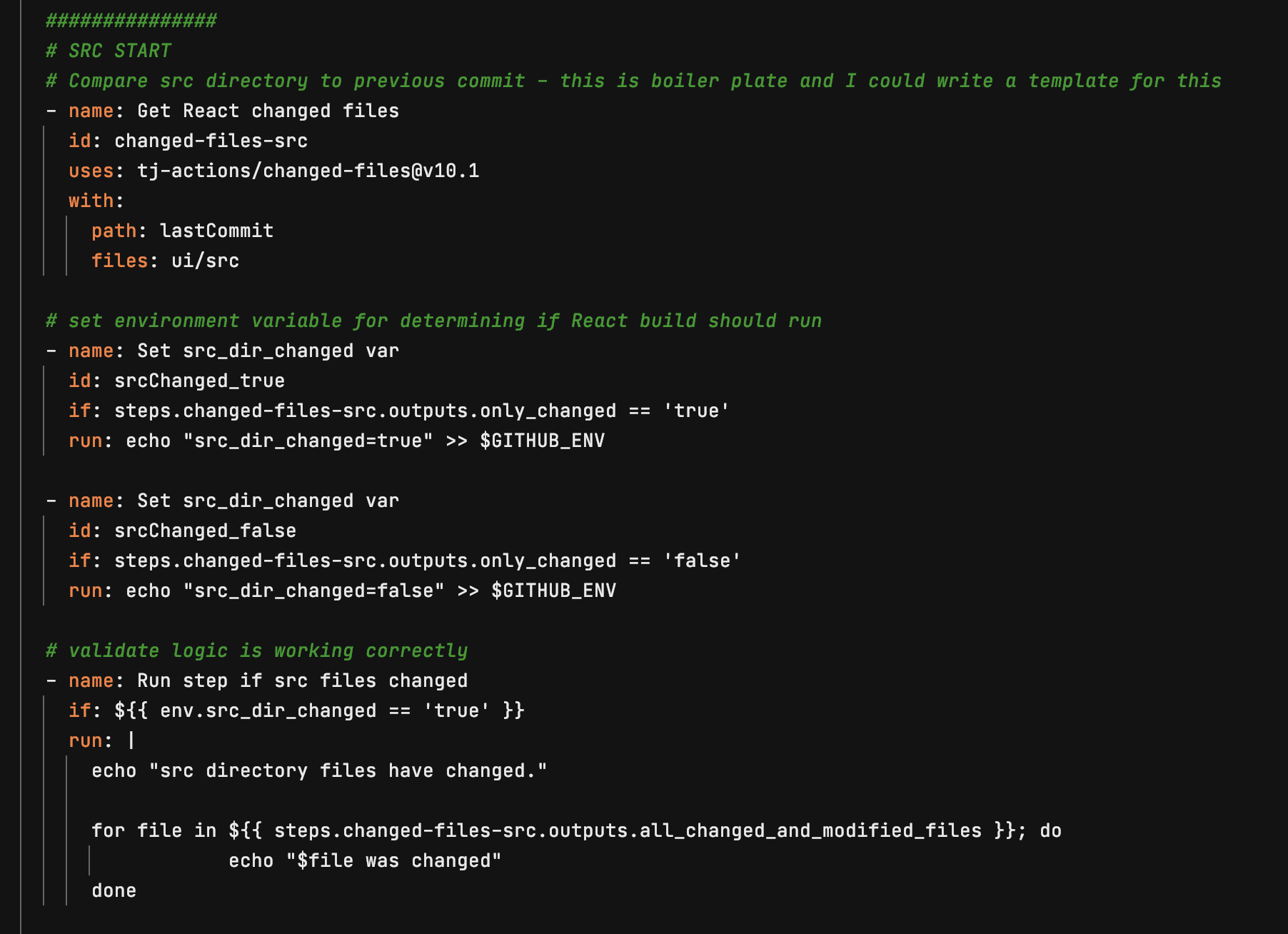

Run Terraform apply if files have changed, and set the environment variables needed by the following steps

Build the GraphQL code, zip it for Lambda, and create the Lambda function
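The Lambda Terraform in this post sets `source_code_hash = filebase64sha256("./function/function.zip")`. That function returns the base64-encoded SHA-256 digest of the file's bytes, and Terraform uses a change in that value to notice that the function code needs updating. This Node.js sketch reproduces the computation with the built-in `crypto` module. It can be handy for checking locally whether a rebuilt zip will trigger a deploy.

```javascript
// Sketch of what Terraform's filebase64sha256() computes for source_code_hash:
// the SHA-256 digest of the file's raw bytes, encoded as base64.
const crypto = require("crypto");
const fs = require("fs");

function base64Sha256(buffer) {
  return crypto.createHash("sha256").update(buffer).digest("base64");
}

function fileBase64Sha256(filePath) {
  return base64Sha256(fs.readFileSync(filePath));
}

// Any change to the zip's bytes produces a different hash, which is how
// Terraform notices the Lambda code needs to be updated.
console.log(base64Sha256(Buffer.from("")));
console.log(base64Sha256(Buffer.from("exports.handler = 1;")));
```

Zip archives store file timestamps, so rebuilding an unchanged project can still change the hash and cause a redeploy.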

API Gateway Terraform:

```hcl
# Create an API endpoint for graphql lambda
resource "aws_api_gateway_rest_api" "gql" {
  name        = "BlogGraphql"
  description = "Terraform Serverless GraphQL Example"
}

resource "aws_api_gateway_account" "gql" {
  cloudwatch_role_arn = aws_iam_role.cloudwatch.arn
}

resource "aws_iam_role" "cloudwatch" {
  name = "${local.resource_prefix}-api_gateway_cloudwatch_global_gql"

  assume_role_policy = jsonencode({
    Version = "2012-10-17"
    "Statement" : [
      {
        Sid : "",
        Effect : "Allow",
        Principal : {
          Service : "apigateway.amazonaws.com"
        },
        Action : "sts:AssumeRole"
      }
    ]
  })
}

resource "aws_iam_role_policy_attachment" "cloudwatch_gql" {
  policy_arn = "arn:aws:iam::aws:policy/service-role/AmazonAPIGatewayPushToCloudWatchLogs"
  role       = aws_iam_role.cloudwatch.name
}

// Creates method in api gateway for graphql
// https://docs.aws.amazon.com/apigateway/latest/developerguide/set-up-lambda-proxy-integrations.html
resource "aws_api_gateway_resource" "gql_method" {
  rest_api_id = aws_api_gateway_rest_api.gql.id
  parent_id   = aws_api_gateway_rest_api.gql.root_resource_id
  path_part   = "graphql"
}

resource "aws_api_gateway_method" "proxy" {
  rest_api_id   = aws_api_gateway_rest_api.gql.id
  resource_id   = aws_api_gateway_resource.gql_method.id
  http_method   = "POST"
  authorization = "NONE"
}

// point api to lambda for execution
resource "aws_api_gateway_integration" "lambda" {
  rest_api_id             = aws_api_gateway_rest_api.gql.id
  resource_id             = aws_api_gateway_method.proxy.resource_id
  http_method             = aws_api_gateway_method.proxy.http_method
  integration_http_method = "POST"
  type                    = "AWS_PROXY"
  uri                     = aws_lambda_function.gql_lambda.invoke_arn
}

resource "aws_api_gateway_integration" "lambda_root" {
  rest_api_id             = aws_api_gateway_rest_api.gql.id
  resource_id             = aws_api_gateway_method.proxy_root.resource_id
  http_method             = aws_api_gateway_method.proxy_root.http_method
  integration_http_method = "POST"
  type                    = "AWS_PROXY"
  uri                     = aws_lambda_function.gql_lambda.invoke_arn
}

resource "aws_api_gateway_deployment" "gql" {
  depends_on = [
    aws_api_gateway_integration.lambda,
    aws_api_gateway_integration.lambda_root,
  ]

  rest_api_id = aws_api_gateway_rest_api.gql.id
  stage_name  = local.stage_name
}

resource "aws_api_gateway_method" "proxy_root" {
  rest_api_id   = aws_api_gateway_rest_api.gql.id
  resource_id   = aws_api_gateway_rest_api.gql.root_resource_id
  http_method   = "ANY"
  authorization = "NONE"
}

// Create a custom domain for api
resource "aws_api_gateway_domain_name" "graphql" {
  certificate_arn = local.graphql_cert_arn
  domain_name     = "graphql.${local.bucket_name}"
}

// This creates an A record in Route 53 for graphql subdomain
resource "aws_route53_record" "graphql" {
  name    = aws_api_gateway_domain_name.graphql.domain_name
  type    = "A"
  zone_id = local.zone_id

  alias {
    evaluate_target_health = true
    name                   = aws_api_gateway_domain_name.graphql.cloudfront_domain_name
    zone_id                = aws_api_gateway_domain_name.graphql.cloudfront_zone_id
  }
}

// this maps the custom domain to the api
resource "aws_api_gateway_base_path_mapping" "example" {
  api_id = aws_api_gateway_rest_api.gql.id
  // reference the deployment so the stage exists before the mapping is created
  stage_name  = aws_api_gateway_deployment.gql.stage_name
  domain_name = aws_api_gateway_domain_name.graphql.domain_name
}
```

Lambda Terraform:

```hcl
// attach policy to role
resource "aws_iam_role_policy_attachment" "sns" {
  role       = aws_iam_role.iam_for_lambda.name
  policy_arn = aws_iam_policy.gql_sns.arn
}

resource "aws_iam_role_policy_attachment" "dynamoDb" {
  role       = aws_iam_role.iam_for_lambda.name
  policy_arn = aws_iam_policy.gql_dynamoDb.arn
}

resource "aws_iam_role_policy_attachment" "basic" {
  policy_arn = "arn:aws:iam::aws:policy/service-role/AWSLambdaBasicExecutionRole"
  role       = aws_iam_role.iam_for_lambda.name
}

// create policy
resource "aws_iam_policy" "gql_dynamoDb" {
  name        = "${local.resource_prefix}-gql-dynamodb"
  description = "Policy for dynamo db access"
  policy      = data.aws_iam_policy_document.gql_dynamoDb.json
}

// create policy document
data "aws_iam_policy_document" "gql_dynamoDb" {
  statement {
    actions = [
      "dynamodb:BatchGetItem",
      "dynamodb:GetItem",
      "dynamodb:Query",
      "dynamodb:Scan",
      "dynamodb:BatchWriteItem",
      "dynamodb:PutItem",
      "dynamodb:UpdateItem",
      "dynamodb:DescribeTable"
    ]
    effect    = "Allow"
    resources = [local.dynamo_arn, "${local.dynamo_arn}/index/*"]
  }
}

// create policy
resource "aws_iam_policy" "gql_sns" {
  name        = "${local.resource_prefix}-gql-sns"
  description = "Policy for sns publish"
  policy      = data.aws_iam_policy_document.gql_sns.json
}

data "aws_iam_policy_document" "gql_sns" {
  statement {
    actions = [
      "sns:Publish"
    ]
    effect    = "Allow"
    resources = [local.sns_arn]
  }
}

resource "aws_iam_role" "iam_for_lambda" {
  name = "${local.resource_prefix}_iam_for_gql_lambda"

  assume_role_policy = jsonencode({
    Version = "2012-10-17"
    Statement = [
      {
        Action = "sts:AssumeRole"
        Effect = "Allow"
        Sid    = ""
        Principal = {
          Service = "lambda.amazonaws.com"
        }
      },
    ]
  })
}

// create lambda function
resource "aws_lambda_function" "gql_lambda" {
  filename      = "./function/function.zip"
  function_name = "${local.resource_prefix}_simple_gql_lambda_v"
  role          = aws_iam_role.iam_for_lambda.arn
  handler       = "graphql.graphqlHandler"

  # The filebase64sha256() function is available in Terraform 0.11.12 and later
  # For Terraform 0.11.11 and earlier, use the base64sha256() function and the file() function:
  # source_code_hash = "${base64sha256(file("lambda_function_payload.zip"))}"
  source_code_hash = filebase64sha256("./function/function.zip")

  runtime = "nodejs14.x"

  environment {
    variables = {
      ddb_table_name     = local.dynamo_table_name
      ddb_hash_key       = local.dynamo_hash_key
      ddb_region         = local.region
      GRAPHQL_ENDPOINT   = aws_api_gateway_domain_name.graphql.domain_name
      SNS_ARN            = local.sns_arn
      OKTA_CLIENT_ID     = var.okta_client_id
      OKTA_CLIENT_SECRET = var.okta_client_secret
      OKTA_DOMAIN        = var.okta_domain
      OKTA_ISSUER_SUFFIX = var.okta_issuer_suffix
      OKTA_ISSUER        = local.OKTA_ISSUER
      OKTA_AUDIENCE      = var.okta_audience
      APP_BASE_PORT      = 4000
    }
  }

  tags = merge(
    local.base_tags,
    {
      Name      = "${local.resource_prefix}_simple_gql_lambda_v"
      directory = basename(path.cwd)
    },
  )
}

resource "aws_lambda_permission" "apigw" {
  statement_id  = "AllowAPIGatewayInvoke"
  action        = "lambda:InvokeFunction"
  function_name = aws_lambda_function.gql_lambda.function_name
  principal     = "apigateway.amazonaws.com"

  # The "/*/*" portion grants access from any method on any resource
  # within the API Gateway REST API.
  source_arn = "${aws_api_gateway_rest_api.gql.execution_arn}/*/*"
}
```

Testing React and GraphQL

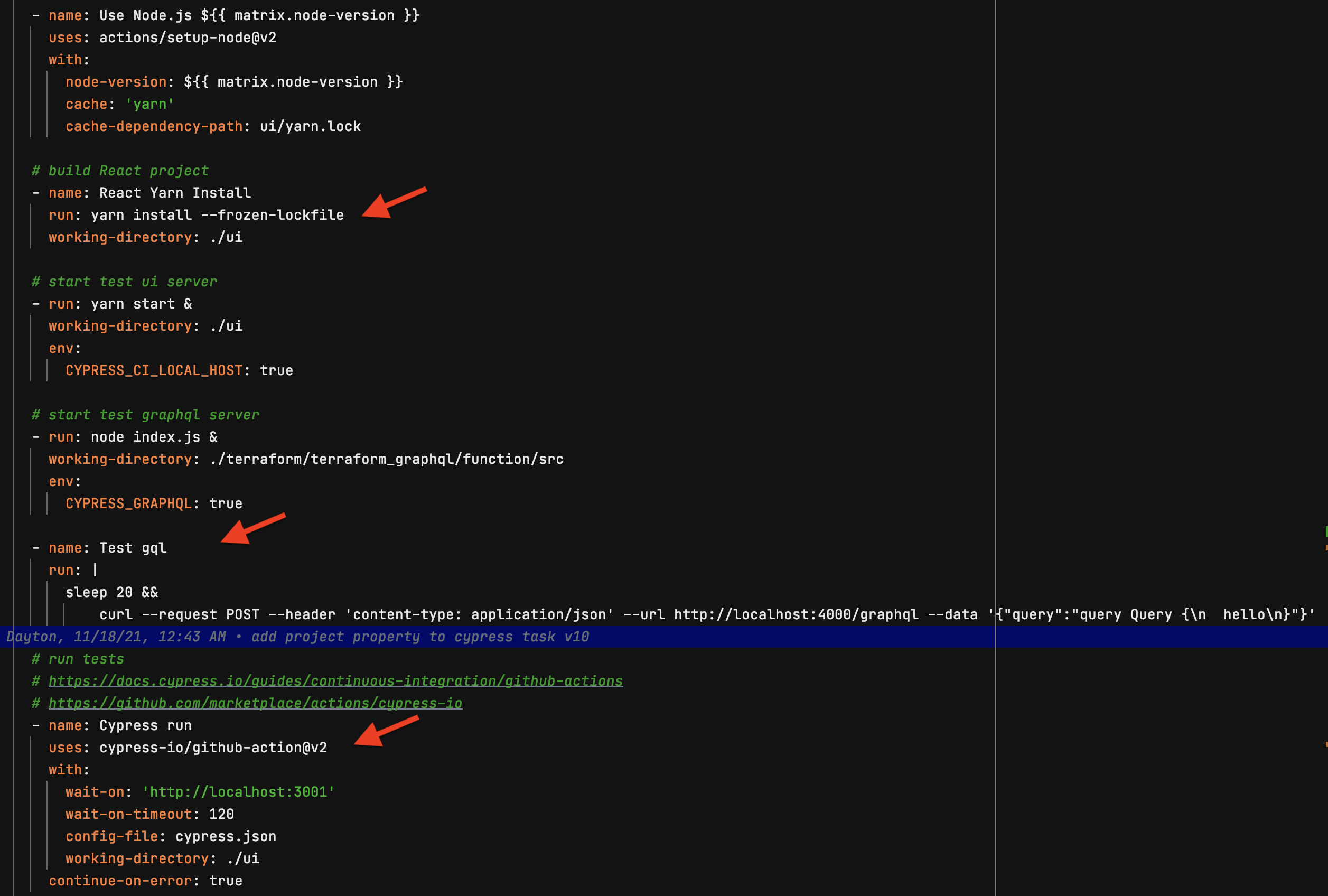

We need to build automated testing into our code, even if we start small. My favorite testing tool is Cypress.io, and I like to use a docker-compose file to set up a local development environment of networked containers with my local code mounted as volumes. This lets me continuously build and test in a stable environment that should be the same no matter where I run it. Cypress provides two tools. The desktop app lets you watch tests run in real time and inspect the state of the browser at any point during a test. The command line utility integrates easily into your favorite pipeline tool.

Compose File

```yaml
version: "3.9" # optional since v1.27.0
services:
  # UI container - uses command from package.json file to start
  # need to install nodemon
  react:
    image: node:16
    command: /bin/bash -c "ls & npm install nodemon -g && yarn start:nodemon"
    ports:
      - "3001:3001"
    volumes:
      - ./ui:/src
    working_dir: /src
    # This variable is used in App.js, if it exists then it points to this endpoint
    # with docker network the service name (graphql) is all we need to route
    # ui should be running at http://localhost:3001/
    environment:
      DOCKER_GRAPHQL_ENDPOINT: graphql
  dynamodb:
    image: amazon/dynamodb-local:1.17.0
    ports:
      - "8000:8000"
    # gives container a drive to mount
    volumes:
      - ./terraform/terraform_graphql/function/dynamodb:/home/dynamodblocal/data
    working_dir: /home/dynamodblocal
  graphql:
    image: node:16
    command: /bin/bash -c "ls & npm install nodemon -g && nodemon index.js"
    depends_on:
      - dynamodb
    ports:
      - "4000:4000"
    volumes:
      - ./terraform/terraform_graphql/function/src:/src
      - $HOME/.aws/credentials:/root/.aws/credentials
    working_dir: /src
    # graphql should be running at http://localhost:4000/graphql
    environment:
      # This value tells graphql to point at local version of dynamodb if it exists
      CYPRESS_GRAPHQL: "true"
      GRAPHQL_PORT: 4000
      # Tells graphql to ignore the need for authentication
      JWT_OVERRIDE: "true"
      # points to service running above
      DYNAMO_HOST: dynamodb
```

Here the pipeline runs Cypress tests that cover the UI and GraphQL:

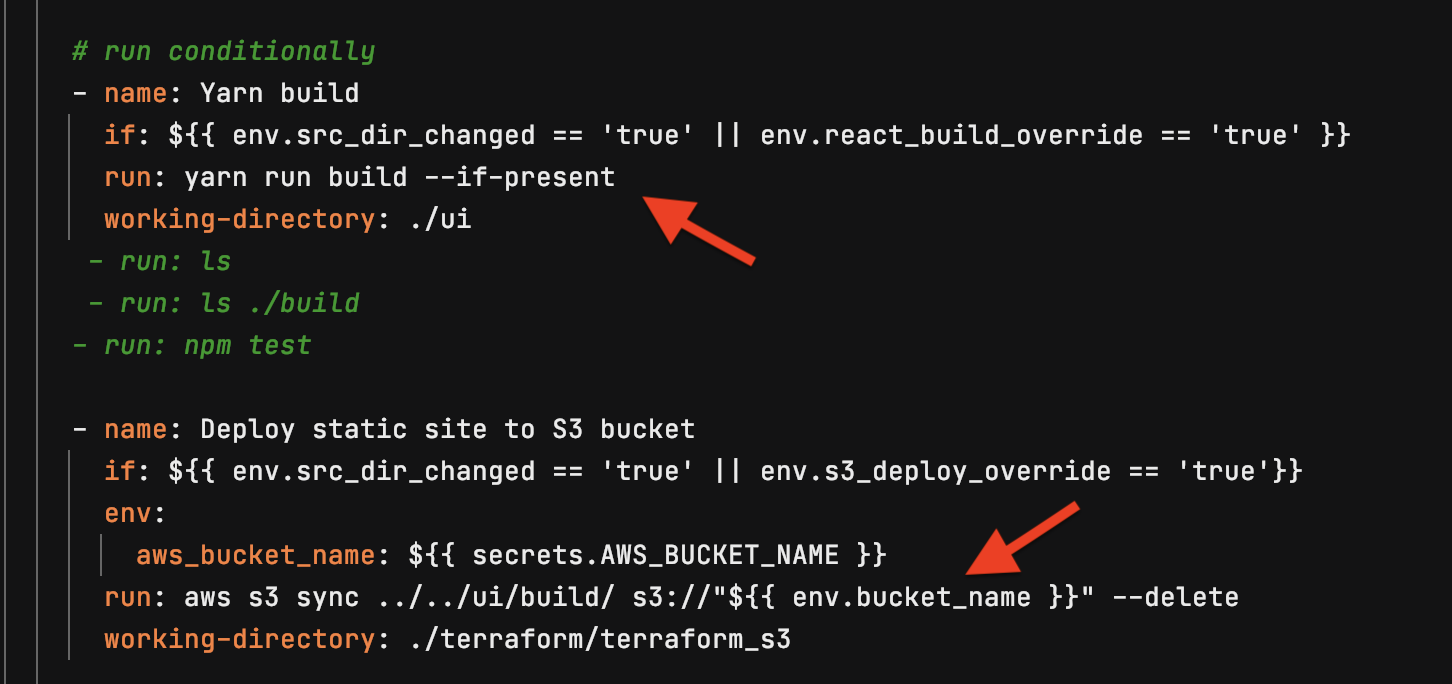

This part builds the React project and uploads it to the S3 bucket:

This conditionally updates the CloudFront distribution if Terraform files have changed: